So apparently my VPS machine isn't up to snuff yet? I'm not sure where the problem is, but it's down for now, so I've removed the redirect (and placed one to here).

If Wil Wheaton can be in exile, so can I, by gum! Maybe it's best to think of this as the "Old World". That makes it sound more enjoyable, huh?

Anyway, it makes a great sort of backup. Sorry about the issues, I'm working on them now.

Extra special thanks to one person who went way above the call of duty in letting me know, and I really appreciate it. I'll post his name if he wants to fess up to it :-)

Friday, July 3, 2009

Welcome to the future!

If you're reading this via RSS reader, I invite you to come visit the new blog! I've got all sorts of enhancements available here, so come check it out!

Let's do an overview of what's new. First, instead of being hosted on Blogger's servers, the site now runs on a VPS, and the domain name is www.standalone-sysadmin.com. Since there are tons of links going to the old site, I've implemented an HTML meta redirect to a PHP script that I wrote. It parses the referrer information and sends the user to the correct page on this site.
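For the curious, the referrer-based lookup can be sketched roughly like this. It's written in Python rather than PHP, and it is only an illustration: the old hostname (`old-blog.example.com`) and the idea that old and new pages share the same path are assumptions, not a description of the real script.

```python
from urllib.parse import urlsplit

NEW_SITE = "http://www.standalone-sysadmin.com"  # domain named in the post
OLD_HOST = "old-blog.example.com"                # hypothetical old hostname


def redirect_target(referrer):
    """Pick the new-site URL for a visitor bounced over from an old page.

    The meta redirect on the old page makes the browser send that page's
    URL as the referrer, so the script can tell which post was requested.
    """
    if not referrer:
        return NEW_SITE + "/"
    parts = urlsplit(referrer)
    if parts.hostname != OLD_HOST:
        # Unknown origin: fall back to the front page.
        return NEW_SITE + "/"
    # Assumption: posts keep the same path on the new site.
    return NEW_SITE + (parts.path or "/")
```

Anything without a usable referrer just lands on the front page, which seems like the least surprising fallback.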

The big RSS icon in the top right-hand corner of the page links to the FeedBurner stream. If you subscribe via RSS (and as of today, that's 585 of you out there just at Google Reader), please update your RSS feed to http://feeds.feedburner.com/standalone-sysadmin/rWoU.

The "commentators" box on the right-hand side examines user comments, checks the email addresses, and looks them up at Gravatar, the globally recognized avatar database. I've noticed that more and more sites seem to be using it, so if you haven't set up your email over there, maybe you should check into it.
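If you're wondering how a Gravatar lookup works, it boils down to hashing the email address. The sketch below is not the code behind the box on this site; it just shows the usual recipe (trim, lowercase, MD5), and the size and fallback-image parameters are arbitrary choices for the example.

```python
import hashlib


def gravatar_url(email, size=48):
    """Build the avatar URL for a commenter's email address."""
    # Gravatar hashes the trimmed, lowercased address.
    normalized = email.strip().lower()
    digest = hashlib.md5(normalized.encode("utf-8")).hexdigest()
    # s = image size in pixels, d = what to show if no avatar is registered.
    return f"https://www.gravatar.com/avatar/{digest}?s={size}&d=identicon"
```

Because only the hash appears in the URL, the commenter's address never shows up in the page source.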

Everything else should be pretty straightforward. I've enabled OpenID logins for those of you who want to use them. The only recurring issue is slowness on the machine. I've talked to the hosting people about that, and when a bigger server opens up, I'll migrate to that. The VPS has 512MB of RAM right now. If this becomes a major problem, I'll temporarily move the blog back to Blogger, but I'm hoping that isn't going to be an issue. Let me know if you find it to be.

So that's it. Feel free to leave feedback! Thanks!

Thursday, July 2, 2009

Update with the hiring and an upcoming blog update

A while back, I talked about hiring another administrator. That process is currently happening and progressing nicely.

If anyone reading the blog applied, thank you. If you didn't receive a call, it is probably because you were far more qualified than what we were looking for. It's a sign of the bad economy that we're having people with 20 years' experience applying for junior positions. I hope this turns around for everyone's sake.

Also, even longer ago, I presented a survey which asked an optional open-ended question: What would you do to improve the blog? Well, I hope you're not too attached to how this blog looks right now, because sometime over the weekend, it's going to change quite a bit. This new iteration will require you to update the URL for the RSS feed if you're a subscriber.

To make the transition easier, I'm going to keep publishing articles here in addition to the new site, so RSS subscribers who haven't caught the news aren't left in the dark. You will automatically be redirected to the new site if you visit this address, though. My plan is for it to be seamless for people visiting, and nearly painless for subscribers. I have no doubt that you'll let me know how it affects you and if something isn't working.

Here's where the fun begins...

Labels:

administrivia

Wednesday, July 1, 2009

New Article: Manage Stress Before It Kills You

My newest column is up at Simple Talk Exchange. It's called "Manage Stress Before It Kills You."

It starts out with a true-to-life story of something that happened to me one night. It was scary, but it did let me know that something was wrong. My advice is to manage your stress before it gets to this point, because it isn't an enjoyable experience.

Please make sure to vote up the article if you like it! Thanks!

Tuesday, June 30, 2009

Fun with VMware ESXi

Day one of playing with bare metal hypervisors, and I'm already having a blast.

I decided to try ESXi first, since it was the closest relative to what I'm running right now.

Straight out of the box, I run into my first error. I'm installing on a Dell PowerEdge 1950 server. The CD boots into an interesting initialization sequence. The screen turns a featureless black, and there are no details as to what is going on behind the scenes. The only indication that the machine isn't frozen is a slowly incrementing progress bar at the bottom. After around 20 minutes (I'm guessing the time it takes to read and decompress an entire installation CD into memory), the screen changes to a menu asking me to hit R if I want to repair, or Enter if I want to install. I want to install, so I hit Enter. Nothing happens, so I hit Enter again. And again. And again. It takes a few more tries before I realize that the "numlock" light is off. Curious, I hit numlock and it doesn't respond.

Awesome.

I unplug the keyboard and plug it back in. Nothing. Move it to the front port. Nothing. I reboot and come back to my desk to research. Apparently, I'm not alone. Those accounts are from 2008. I downloaded this CD an hour ago, and it's 3.5 U4 (the most current 3.5.x release). It is supposed to be supported on the PE1950, but if the keyboard doesn't even work, I have my doubts.

Lots of people have suggested using a PS/2 keyboard as the accepted workaround, but in a similar tone to most of my problem/solution options, this server has no PS/2 ports.

I'm downloading ESX v4 now. I'll update with how it goes, no doubt.

Labels:

esxi,

virtualization,

vmware

Monday, June 29, 2009

Encryption tools for Sysadmins

Every once in a while, someone will ask me what I use for keeping passwords secure. I tell them that I use Password Safe, which was recommended to me when *I* asked the question.

Other times, people will ask for simple ways to encrypt or store files. If you're looking for something robust, cross-platform, and full-featured, you could do a lot worse than TrueCrypt. Essentially, it hooks into the operating system's kernel and allows it to mount entire encrypted volumes as if they were drives. It also has advanced security methods to hide volumes, so that if searched, no volumes would be found without knowing the proper key. In addition, it has a feature that can be valuable if you are seized and placed under duress: alongside the "real" password, a second one can be set up to open another volume, so that your captors believe that you gave them the correct information. Unreal.

So you see that TrueCrypt is an amazing piece of software. For many things, though, it's definitely overkill. Sometimes you just want something light that will encrypt a file and that's it. In this case, GNU Privacy Guard is probably your best bet. I use it in our company to send and receive client files over non-secure transfer methods (FTP and the like). With proper key exchange and signed files, we can be confident that a file on our servers came from our clients, and vice versa. If you're running a Linux distribution, chances are good you've got GPG installed already. Windows and Mac users will have to get it, but it's absolutely worth it, and the knowledge of how public key encryption works is at the heart of everything from web certificates to ssh authentication. If you want to learn more about how to use it, Simple Help has a tutorial on it, covering the very basic usage. Once you're comfortable with that, check out the manual.
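To give a flavor of what the file exchange looks like, here is a small Python wrapper around the `gpg` command line. Treat it as a sketch: the file names and recipient address are made up, and it assumes the recipient's public key and your own private key are already in your keyring.

```python
import subprocess


def gpg_encrypt_command(path, recipient, output=None):
    """Build a gpg command that signs `path` and encrypts it to `recipient`."""
    output = output or path + ".gpg"
    # Options come first, then the commands (--sign --encrypt), then the file.
    return ["gpg", "--output", output, "--recipient", recipient,
            "--sign", "--encrypt", path]


def gpg_decrypt_command(path, output):
    """Build a gpg command that decrypts `path`; gpg also checks the signature."""
    return ["gpg", "--output", output, "--decrypt", path]


def run(command):
    """Run a gpg command and raise if gpg reports a failure."""
    subprocess.run(command, check=True)
```

Something like `run(gpg_encrypt_command("report.csv", "client@example.com"))` would leave `report.csv.gpg` next to the original, ready to push over FTP.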

I'm sure I missed some fun ones, so make sure to suggest what you use!

Labels:

encryption,

security

Thursday, June 25, 2009

Enable Terminal Server on a remote machine

Well, sort of.

This is an old howto that I apparently missed. I really know so little about Windows administration that finding gems like this makes me really excited :-)

Anyway, it's possible to connect to a remote machine's registry, alter the data in it, then remotely reboot the machine so that it can come back up with the server running. That's pretty smooth!
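As a rough illustration of the idea, here is how the two steps might look in Python on a Windows admin workstation. The registry value used (`fDenyTSConnections` under the Terminal Server key) is the one commonly documented for this trick, but I'm assuming it matches the linked how-to; the computer name is hypothetical, and it only works if the Remote Registry service is running on the target and you have admin rights there.

```python
import subprocess

TS_KEY = r"SYSTEM\CurrentControlSet\Control\Terminal Server"
TS_VALUE = "fDenyTSConnections"  # 0 allows connections, 1 denies them


def enable_terminal_server(computer):
    """Flip the 'deny connections' flag in a remote machine's registry."""
    import winreg  # Windows-only module, so import it lazily

    hive = winreg.ConnectRegistry(rf"\\{computer}", winreg.HKEY_LOCAL_MACHINE)
    try:
        with winreg.OpenKey(hive, TS_KEY, 0, winreg.KEY_SET_VALUE) as key:
            winreg.SetValueEx(key, TS_VALUE, 0, winreg.REG_DWORD, 0)
    finally:
        winreg.CloseKey(hive)


def reboot_command(computer):
    """Build the shutdown command that restarts the remote machine right away."""
    return ["shutdown", "/r", "/m", rf"\\{computer}", "/t", "0"]


def reboot(computer):
    """Restart the remote machine so the change takes effect."""
    subprocess.run(reboot_command(computer), check=True)
```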

Here are the details.

I know I'm missing tons more stuff like this. What are your favorites?

Labels:

remote management,

terminal server,

windows

Wednesday, June 24, 2009

Windows Desktop Automated Installations

Over the past couple of weeks, I've had the idea in the back of my mind to build an infrastructure for automated Windows installs, for my users' machines. I've been doing some research (including on ServerFault), and have created a list of software that seems to attempt to fill that niche.

First up is Norton Ghost. From what I can tell, it seems to be the standard image-creating software around. It's been around forever, and according to a slightly skeptical view, seems to be the equivalent of Linux's 'dd' command. It's a piece of commercial software that seems primarily Windows based, but according to the Wiki page supports ext2 and ext3. It does have advanced features, but it looks like you need one license per machine cloned (Experts-Exchange link: scroll to the bottom), and I'm not into spending that sort of money.

Speaking of not spending that sort of money, Acronis True Image has some amazing features. Larger enterprises should probably look into it if they aren't already using it. Just click the link and check the feature set. Nice!

Available for free (sort of) is Windows Deployment Services, courtesy of Windows Server 2008. It's the redesigned version of Remote Installation Services in Server 2003. Word on the street is that it's going to be the recommended way to install Windows 7, winner of the "Most likely to be the next OS on my network when XP is finally unsupported" award. The downside is that I don't currently have any 2008 servers, nor do I plan on upgrading my AD infrastructure. I suppose I could use Remote Installation Services, but eventually I know that I'll upgrade, and then I'll be left learning the new paradigm anyway.

So let's examine some free open source offerings.

It seems like the most commonly recommended software has been Clonezilla so far. Based on Diskless Remote Boot in Linux (DRBL), along with half a dozen other free software packages, it seems to support most filesystems capable of being mounted under Linux (including LVM2-hosted filesystems). It comes in two major releases: Clonezilla Live, able to be booted from a CD/DVD/USB drive, and Clonezilla Server Edition, a dedicated image server. If I were going to implement it, I think I'd keep one of each around. They both sound pretty handy for different tasks.

Next up is FOG, the Free Opensource Ghost clone. I haven't come across a ton of documentation for it, but it sounds intriguing. Listening to Clonezilla -vs- FOG piqued my interest, and this is on my list to try. Feel free to drop feedback if you've used it.

Ghost4Linux exists. That's about all I've found. If you know anything about it, and it's good, let me know.

What I've been considering most heavily is Unattended, which seems very flexible and extensible. It seems to primarily consist of Perl scripts, and instead of dealing with images, it automates installs. This has several advantages, mostly that instead of maintaining one image per model of machine, I can save space by pointing an install to the specific drivers it needs, and keep one "base" set of packages.

As soon as I have time, I'm going to start implementing some of these, and I'll write more about them. If you have any experience with this stuff, I'd love to hear from you.

Labels:

automation,

imaging,

installation,

windows

Monday, June 22, 2009

Examine SSL certificate on the command line

This is more for my documentation than anyone else's, but you might find it useful.

To examine an SSL certificate (for use on a secured web server) from the command line, use this command:

openssl x509 -in filename.crt -noout -text
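If all you care about is when the certificate expires, the same tool can print just that with `-enddate` in place of `-text`. Here's a small Python sketch that wraps it and turns the result into "days left"; the function names are mine, and it assumes `openssl` is on the PATH and prints the date in its usual `notAfter=Jun 22 12:00:00 2010 GMT` form.

```python
import ssl
import subprocess
import time


def read_enddate(cert_path):
    """Run `openssl x509 -in cert_path -noout -enddate` and return its output line."""
    result = subprocess.run(
        ["openssl", "x509", "-in", cert_path, "-noout", "-enddate"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def days_until_expiry(enddate_line, now=None):
    """Turn a 'notAfter=...' line into whole days remaining (negative if expired)."""
    cert_time = enddate_line.split("=", 1)[1]
    expires = ssl.cert_time_to_seconds(cert_time)  # seconds since the epoch
    now = time.time() if now is None else now
    return int((expires - now) // 86400)
```

Calling `days_until_expiry(read_enddate("filename.crt"))` from a cron job is an easy way to get nagged before a certificate lapses.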

Tuesday, June 16, 2009

More Cable Management

or "I typed a lot on serverfault, I wonder if I can get a blog entry out of it"

Cable management is one of those things that you might be able to read about, but you will never really get the hang of it until you go out and do it. And it takes practice. Good cable management takes a lot of planning, too. You don't get great results if you just throw together a bunch of cable on a rack and call it a day. You've got to plan your runs, order (or create) the right kind of cables and cable management hardware that you need, and it's got to be documented. Only after the documentation is done is the cable job complete (if it even is, then).

When someone asked about Rack Cable Management, I typed out a few of my thoughts, and then kept typing. I've basically pasted it below, because I thought that some of you all might be interested as well.

And just for the record, I've talked about cable management before. Heck, I even did a HOWTO on it a long time ago.

Label each cable

I have a Brother P-Touch labeler that I use. Each cable gets a label on both ends. This is because if I unplug something from a switch, I want to know where to plug it back in, and vice versa on the server end.

There are two methods that you can use to label your cables with a generic labeler. You can run the label along the cable, so that it can be read easily, or you can wrap it around the cable so that it meets itself and looks like a tag. The former is easier to read, the latter is either harder to read or uses twice as much label since you type the word twice to make sure it's read. Long labels on mine get the "along the cable" treatment, and shorter ones get the tag.

You can also buy a specific cable labeler which provides plastic sleeves. I've never used it, so I can't offer any advice.

Color code your cables

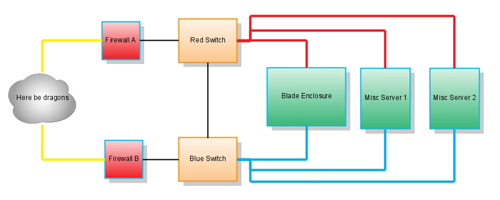

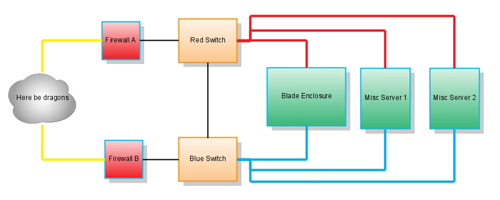

I run each machine with bonded network cards. This means that I'm using both NICs in each server, and they go to different switches. I have a red switch and a blue switch. All of the eth0's go to the red switch using red cables (and the cables are run to the right), and all eth1's go to the blue switch using blue cables (and the cables are run to the left). My network uplink cables are an off color, like yellow, so that they stand out.

In addition, my racks have redundant power. I've got a vertical PDU on each side. The power cables plugged into each side all have a ring of electrical tape matching the color of that side: again, red for right, blue for left. This makes sure that I don't overload a circuit accidentally if things go to hell in a hurry.

Buy your cables

This may ruffle some feathers. Some people say you should cut cables exactly to length so that there is no excess. I say "I'm not perfect, and some of my crimp jobs may not last as long as molded ends", and I don't want to find out at 3 in the morning some day in the future. So I buy in bulk. When I'm first planning a rack build, I determine where, in relation to the switches, my equipment will be. Then I buy cables in groups based on that distance.

When the time comes for cable management, I work with bundles of cable, grouping them by physical proximity (which also groups them by length, since I planned this out beforehand). I use velcro zip ties to bind the cables together, and also to make larger groups out of smaller bundles. Don't use plastic zip ties on anything that you could see yourself replacing. Even if they re-open, the plastic will eventually wear down and not latch any more.
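The "buy cables in groups based on distance" step is easy to rough out in a few lines of Python. This is only a sketch of the arithmetic: the stock lengths and the slack allowance are made-up numbers, and a real rack will need adjusting for how far the cable has to travel sideways.

```python
from collections import Counter

RACK_UNIT_CM = 4.445                       # 1U is 1.75 inches
STOCK_LENGTHS_CM = [30, 60, 90, 150, 210]  # hypothetical lengths a vendor sells
SLACK_CM = 40                              # hypothetical allowance for side routing


def cable_length_cm(device_u, switch_u):
    """Round the run from a device to the switch up to the next stock length."""
    run = abs(device_u - switch_u) * RACK_UNIT_CM + SLACK_CM
    for stock in STOCK_LENGTHS_CM:
        if stock >= run:
            return stock
    raise ValueError(f"no stock cable long enough for a {run:.0f} cm run")


def shopping_list(device_positions, switch_u):
    """Count how many cables of each stock length one switch needs."""
    return Counter(cable_length_cm(u, switch_u) for u in device_positions)
```

Run it once per switch (red and blue) and you have your order quantities per color and length.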

Keep power cables as far from ethernet cables as possible

Power cables, especially clumps of power cables, cause electromagnetic interference (EMI, also known as radio frequency interference, or RFI) on any surrounding cables, including CAT-* cables (unless they're shielded, but if you're using STP cables in your rack, you're probably doing it wrong). Run your power cables away from the CAT5/6. And if you must bring them close, try to cross them at right angles.

Monday, June 15, 2009

The Backup Policy: Databases

It's getting time to revisit my old friend, the backup policy. My boss and I reviewed it last week before he left, and I'm going to spend some time refining the implementation of it.

Essentially, our company, like most, operates on data. The backup policy is designed to ensure that no piece of essential data is lost or unusable, and we try to accomplish that through various backups and archives (read Michael Janke's excellent guest blog entry, "Backups Suck", for more information).

The first thing listed in our backup policy is our Oracle database. It's our primary data store, and at 350GB, a real pain in the butt to transfer around. We've got our primary Oracle instance at the primary site (duh?), and it's producing archive logs. That means anytime there's a change in the database, a log file gets written to. We then ship those logs to three machines that are running in "standby mode", where they are replayed to bring each standby database up to date.
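The shipping half of that is conceptually simple: copy any archive log a standby doesn't have yet. The Python sketch below shows the idea using plain directories as stand-ins for the three standby machines. It is not how our logs actually travel (that would go over the network), and the `*.arc` file pattern is a hypothetical naming convention.

```python
import shutil
from pathlib import Path


def ship_new_logs(archive_dir, standby_dirs, pattern="*.arc"):
    """Copy archive logs that a standby is missing; return the copies made."""
    shipped = []
    # Sorted so logs arrive in name order, which standbys replay in sequence.
    for log in sorted(Path(archive_dir).glob(pattern)):
        for standby in standby_dirs:
            target = Path(standby) / log.name
            if not target.exists():
                shutil.copy2(log, target)
                shipped.append(target)
    return shipped
```

Running it repeatedly is harmless, since logs already present on a standby are skipped.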

The first standby database is also at the primary site. This enables us to switch over to another database server in an instant if the primary machine crashes with an OS problem or a hardware problem, or something similar that hasn't been corrupting the database for a significant time.

The second standby database is at the backup site. We would move to it in the event that both database machines crash at the primary site (not likely), or if the primary site is rendered unusable for some other reason (slightly more likely). Ideally, we'd have a very fast link (100Mb/s+) between the two sites, but this isn't the case currently, although a link like that is planned in the future.

The third standby database is on the backup server. The backup server is at a third location and has a 16-tape library attached to it. In addition to lots of other data that I'll cover in later articles, the Oracle database and historic transaction logs get spooled here so that we can create archives of the database.

These archives would be useful if we found out that several months ago, an unnoticed change went through the database, like a table getting dropped, or some kind of slight corruption that wouldn't bring attention to itself. With archives, we can go back and find out how long it has been that way, or even recover data from before the table was dropped.

Every Sunday, the second standby database is shut down and copied to a test database. After it is copied, it's activated on the test database machine, so that our operations people can test experimental software and data on it.

In addition, a second testing environment is going to be launched at the third site, home of the backup machine. This testing environment will be fed in a similar manner from the third standby database.

Being able to activate these backups helps to ensure that our standby databases are a viable recovery mechanism.

The policy states that every Sunday an image will be created from the standby instance. This image will be paired with the archive logs from the next week (Mon-Sat) and written to tape the following Sunday, after which a new image will be created. Two images will be kept live on disk, and another two will be kept on disk in compressed form (it's faster to uncompress an image on disk than to read it back from tape).
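
To make the rotation a little easier to picture, here is a rough Python sketch of the schedule described above. It is only an illustration, not the script we actually run: the function names, the state labels, and the "tape only" label for anything older than the four images on disk are my own assumptions.

```python
from datetime import date, timedelta

LIVE_IMAGES = 2        # newest images kept uncompressed on disk
COMPRESSED_IMAGES = 2  # next-newest images kept compressed on disk


def most_recent_sunday(today: date) -> date:
    """Return today if it is a Sunday, otherwise the Sunday before it."""
    # date.weekday(): Monday is 0 ... Sunday is 6
    return today - timedelta(days=(today.weekday() + 1) % 7)


def image_schedule(today: date, weeks: int = 5):
    """Describe the last `weeks` Sunday images as of `today`."""
    newest = most_recent_sunday(today)
    rows = []
    for age in range(weeks):
        taken = newest - timedelta(weeks=age)
        if age < LIVE_IMAGES:
            state = "live on disk"
        elif age < LIVE_IMAGES + COMPRESSED_IMAGES:
            state = "compressed on disk"
        else:
            state = "tape only"  # assumed label for images rotated off disk
        rows.append({
            "image_taken": taken,
            # archive logs from the Monday through Saturday after the image
            "logs_from": taken + timedelta(days=1),
            "logs_to": taken + timedelta(days=6),
            # the image plus its logs go to tape the following Sunday
            "tape_date": taken + timedelta(days=7),
            "state": state,
        })
    return rows


if __name__ == "__main__":
    for row in image_schedule(date(2009, 6, 17)):
        print(row["image_taken"], row["logs_from"], row["logs_to"],
              row["tape_date"], row["state"])
```

Running it for any given day lists the Sunday images that should exist, the week of logs each one is paired with, and when each pair is due on tape, which is handy for checking the disk and the tape library against the policy.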

In the future, I'd like to build in a method to regularly restore a DB image from tape, activate it, and run queries against it, similar to the testing environments. This would extend our "known good" area from the database images to include our backup media.

So that's what I'm doing to prevent data loss from the Oracle DB. I welcome any questions, and I especially welcome any suggestions that would improve the policy. Thanks!

Friday, June 12, 2009

What is LDAP?

Too busy to really do a big update, but if you've ever wanted to learn what LDAP was, but didn't know who to ask, Sysadmin1138 has a great LDAP WhatIs (that's opposed to a HowTo) on his blog today.

Thursday, June 11, 2009

Today, on a very special Standalone Sysadmin...

I named this blog Standalone Sysadmin for a very good reason. Since 2003 or so, I have very much been a standalone sysadmin. I have worked on networks and infrastructures where, in some cases, the only single point of failure was me. This is not an ideal situation. My bus factor is through the roof.

I have previously complained about the amount of stress that I have at my current position pretty frequently on here (too frequently, really), and I've felt for a long time that it was caused by being the sole point of contact for any IT issues in the organization. The 2008 IT (dis)Satisfaction Survey backed up my beliefs.

After some extended discussions with management about my predicament, they have agreed to help me out by hiring a junior administrator to assist me in keeping the infrastructure together. Hooray!

So, here is the job description. We're posting this on Craigslist and Monster. The emphasis is on junior administrator, because of the lack of money we have to put toward the role at the moment. Chances are that if you're already an administrator and you're reading this blog, you are probably more advanced than we're looking for, but maybe you know someone who is smart, young, wants to get into IT administration, and is located somewhere around Berkeley Heights, NJ. It might not be a lot of money, but it's definitely a learning experience, and whoever gets the job will get to play with cool toys ;-)

Here's the original post from Craigslist:

Small, growing, and dynamic company is seeking a junior administrator to enhance the sysadmin team. Responsibilities include desktop support, low level server and network administration, and performing on-call rotation with the lead administrator.

The ideal candidate will be an experienced Linux user who has performed some level of enterprise Linux administration (CentOS/RedHat/Slackware preferred). A history of technical support of Windows XP and Mac OS X is valuable, although the amount of remote support is limited. A familiarity with Windows Server 2003 is a plus.

The most important characteristic of our ideal candidate is the ability to learn quickly, think on his/her feet, and adapt to new situations.

Wednesday, June 10, 2009

Opsview->Nagios - Is simpler better?

I'm on the cusp of implementing my new Nagios install at the backup site. It's going to be very similar in terms of configuration to the primary site. At the same time, I'm looking at alternate configuration methods, mostly to see what's out there and available.

Since the actual configuration of Nagios is...labyrinthine, I was looking to see if an effective GUI had been created since the last time I looked. I searched on ServerFault and found that someone had already asked the question for me. The majority of the votes had been thrown toward Opsview, a pretty decent looking interface with lots of the configuration directives available via interface elements. Someone obviously put a lot of work into this, from the screen shots.

It turns out that Opsview has a VM image available for testing, so I downloaded it and tried it out. I have to say, the interface is as slick as the screenshots make it out to be. Very smooth experience, with none of the "check the config, find the offending line, fix the typo, check the config..." that editing Nagios configurations by hand tends to produce.

As I was clicking and configuring, I thought back to Michael Janke's excellent post, Ad Hoc -vs- Structured Systems Management (really, go read it. I honestly believe it's his magnum opus). One of the most important lessons is that to maintain the integrity and homogeneity of configuration, you don't click and configure by hand, you use scripts to perform repeatable actions, because they're infinitely more accurate than a human clicking and typing.

The ease of access provided by Opsview is tempting, and I can't say that I don't trust it, but I can say that I don't trust myself to click the right boxes all the time. My scripts don't have that problem. Therefore, I'm going to continue to use my scripts.

Remember, if you can script it, script it. If you can't script it, make a checklist.
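
For anyone wondering what "script it" looks like for Nagios, here is a minimal Python sketch of the idea: generate the host definitions from one inventory instead of typing each one. It is not my actual script. The inventory, the addresses, and the choice of the generic-host template (the one from the Nagios sample configuration) are assumptions for illustration.

```python
HOST_TEMPLATE = """define host {{
    use        {template}
    host_name  {name}
    alias      {alias}
    address    {address}
}}
"""

# Hypothetical inventory; a real script might read this from a CSV or a database.
HOSTS = [
    {"name": "db1", "alias": "Primary database", "address": "192.0.2.10"},
    {"name": "db2", "alias": "Standby database", "address": "192.0.2.11"},
]


def render_hosts(hosts, template="generic-host"):
    """Return Nagios host definitions for every entry in `hosts`."""
    return "\n".join(
        HOST_TEMPLATE.format(template=template, **host) for host in hosts
    )


if __name__ == "__main__":
    # Redirect this output into a .cfg file that nagios.cfg includes.
    print(render_hosts(HOSTS))
```

Because every definition comes out of the same template, a typo gets fixed once and the primary and backup sites stay identical. You would still want to run the usual Nagios config check (nagios -v against your main config file) before reloading.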

Monday, June 8, 2009

Authenticating OpenBSD against Active Directory

I frequent ServerFault when I have spare time, and I found this excellent post today.

It's a step-by-step guide to authenticating OpenBSD against Active Directory.

Great set of instructions, so if you have OpenBSD in a mixed environment, take a look at this.

Labels:

active directory,

openbsd

Ah, the pitter patter of new equipment....

Alright, actually really old equipment.

Today my boss is bringing in all of the equipment from the old backup site. There are some fairly heavy duty pieces of equipment, really. I have no idea where I'm going to put it or what I'll do with it all once I get it where it's going, but it's nice to have some spare kit lying around.

Some of it is going to get earmarked for the new tech stuff that I want to learn. Some of the machines have enough processor and RAM that I can make them ESXi hosts, and Hyper-V hosts, since I really do want to try that as well.

There's a 1.6TB external storage array coming, on which I'll probably set up Openfiler. It should be fine as a playground for booting VMs over the SAN.

No, the real problem at this point is going to be figuring out how to cool the machine closet.

Our building (an 8 floor office building in suburban New Jersey) turns off the air conditioning at 6pm and on the weekends. Normally, this isn't a problem for me, since I've really only got 4 servers and an XRaid here. The additional machines that I'd like to run will cause a significant issue with the ambient temperature, and I'm going to have to figure something out.

I do have a small portable AC unit which I believe will process enough BTUs to take care of it, but I'll have to get the numbers to back that up, and even then I'll have to figure out how to vent it so that I'm not breaking any codes. Definitely have to do research on that.
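
Getting the numbers is mostly arithmetic: nearly every watt a server draws ends up as heat, and one watt is roughly 3.412 BTU per hour. Here is a quick Python sketch of the calculation. The wattages and the 20% headroom are placeholders I made up, not measurements from my closet.

```python
BTU_PER_HOUR_PER_WATT = 3.412  # 1 watt of load is about 3.412 BTU/hr of heat


def heat_load_btu_per_hour(watts):
    """Convert a list of equipment power draws (watts) to total BTU/hr."""
    return sum(watts) * BTU_PER_HOUR_PER_WATT


def ac_is_sufficient(watts, ac_btu_per_hour, headroom=0.2):
    """True if the AC rating covers the heat load plus a safety margin."""
    return ac_btu_per_hour >= heat_load_btu_per_hour(watts) * (1 + headroom)


if __name__ == "__main__":
    # Hypothetical draws: four servers, one storage array, three added hosts.
    closet = [350, 350, 350, 350, 400, 450, 450, 450]
    load = heat_load_btu_per_hour(closet)
    print(f"Heat load: {load:.0f} BTU/hr")
    print("10,000 BTU/hr unit sufficient:", ac_is_sufficient(closet, 10_000))
```

Swap in real power draws (from the UPS, a clamp meter, or the spec sheets as a worst case) and the rating printed on the AC unit, and you have the figure to compare. It says nothing about venting or building codes, which still need their own research.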

So there's my next couple of days.

Labels:

server room

Friday, June 5, 2009

General update and a long weekend ahead

After a week of wrestling with CDW and EMC, two weeks of fighting the storage array, and coming up with an ad hoc environment in something like 2 hours, I've had a rough go of this whole backup-site-activation thing.

The latest wrinkle has been that although EMC shipped us the storage processor, the burned CD with an updated FlareOS was corrupt. You would think, "oh, just download it from the website". At least, that's what I thought. But no. Apparently I'm not special enough to get into that section or something, so I called support, explained the situation, and they told me in their most understanding voice that I had to talk to my sales contact. /sigh

So I called my CDW rep, explained the situation, and he said that he'd get right on it! Excellent. That was, I believe, Tuesday, a bit after the 2nd blog entry. Well, yesterday at 4:30pm he told me that he finally talked to the right people at EMC, and that they'd ship the CD out to me so that I would have it this morning. My reaction might be described as "cautiously optimistic".

Could it be that I finally get to install the 2nd storage processor? Maybe! If I do, it's going to make for a long, long weekend. The EMC docs say that the installation itself takes 6 hours with the software install and reinitialization of the processors. If that last sentence sounds ominous to you, too, it really just means that the software on the controllers gets erased and reinstalled, not the data on the SAN. At least that's what they tell me. I'm going to be extremely unhappy if that's not the case.

Tune in next week for the next exciting installment of "How can Matt be screwed by his own ignorance"!

Thursday, June 4, 2009

I am such a dork

Yes, that was my real reputation score when I logged into serverfault.com this evening. The masses agree :-)

Future Tech I want to try out

In the near future, I may be allowed to have a little more quality time with my infrastructure, so when that happens, I want to be able to hit the ground running. To that end, I wanted to enumerate some of the technologies I want to be trying, since I know lots of you really like cool projects.

Desktop/Laptop Management

I want to work on centrally managing my users' machines. I already mentioned rolling out machine upgrades in flights. That way I can distribute all machines pre-configured and domain authenticated, and really take advantage of some Microsoft-y technologies like Group Policy objects to install any additional software on the fly. I want to do network-mapped home directories, as well, which I can only do for those users whose machines have been added to the domain. I'd also like a little more full-featured computer management solution. I'm really sort of leaning towards (at least) trying Admin Arsenal, who did a guest blog spot last week. I'll have to do some more evaluating and try some test runs to see how it goes.

DRBD

DRBD just sounds like a cool technology. Essentially you mirror a block device between two machines, even though they aren't connected by anything other than a network. The scheme is called "shared nothing", and from what I have read, the data is synced at the block level over the network. I can definitely see how it would be valuable, but I have lots of questions about what happens during network outages and the like. Ideally I would be able to set up a lab and go at it.

Puppet

Puppet has been the darling of the configuration management world for a long time. According to the webpage, it translates IT policy into configurations. It sounds like alchemy, but I'm willing to give it a shot, since so many people recommend it so highly. Speaking of people recommending something highly....

ZFS

Somewhere on the hierarchy of great things, this is reputedly somewhere between sliced bread and...well, pretty much whatever is better than ZFS. That's a long list, no doubt, but reports are fuzzy on where bacon stands on the scale. In any event, if you've recently asked a Solaris user what filesystem is best for...pretty much anything, chances are good that they've recommended ZFS. If you've offered any resistance at all, you've probably heard echoes of "But....snapshots! Copy on write! 16 exbibytes!". I suspect that its allure would probably lessen if it were actually available on Linux instead of being implemented in FUSE, but that's probably sour grapes. And in order to actually try it, I'm going to need to try...

OpenSolaris

All the fun of old school Unix without any of the crappy GNU software making us soft and weak. OpenSolaris became available when Sun released the source to Solaris, and a community sprang up around it. Learning (Open)Solaris is actually pretty handy since it runs some pretty large scale hardware and apparently there's some really nice filesystem for it or something that everyone is talking about. I don't know, but I'd like to give it a shot.

How about you? If you had the time, what would you want to spend some time learning?

Labels:

learning,

technology

Wednesday, June 3, 2009

Switching from piecemeal machines to leases

I've got a small number of users for the number of servers I manage. Right now, there are around 15 users in the company, and nearly all of them have laptops. We currently run an astonishing array of different models, each bought sometime in the previous four years. Because of this, no two are alike, and support is a nightmare.

I want to switch from the process we have in place now to a lease based plan, where my users are upgraded in "flights", so to speak. The benefits will be tremendous. I'll be able to roll out a standard image instead of wondering who has what version of what software, issues will be much easier to debug, and I can conduct thorough tests before rollout.

My problem is that I've never done leases nor planned rollouts like this. What advice would you give to someone who is just doing this for the first time?

See the related question on serverfault, as well!

Tuesday, June 2, 2009

Update: The storage gods heard my lament

and delivered my extra storage processor! Praise be to EMC. Or something like that.

Now to figure out where in the rack to jam the batteries ;-)

The god of storage hates me, I know it

It seems like storage and I never get along. There's always some difficulty somewhere. It's always that I don't have enough, or I don't have enough where I need it, and there's always the occasional sorry-we-sold-you-a-single-controller followed by I'll-overnight-you-another-one which appears to be concluded by sorry-it-won't-be-there-until-next-week. /sigh

So yes, looking back at my blog's RSS feed, it was Wednesday of last week that I discovered the problem was the lack of a 2nd storage controller, and it was that same day that we ordered another controller. We asked for it to be overnighted. Apparently overnight is 6 days later, because it should come today. I mean, theoretically, it might not, but hey, I'm an optimist. Really.

Assuming that it does come today, I'm driving to Philadelphia to install it into the chassis. If it doesn't come, I'm driving to Philadelphia to install another server into the rack, because we promised operations that they'd have a working environment by Wednesday, then I'm going again whenever the part comes.

In almost-offtopic news, I am quickly becoming a proponent of the "skip a rack unit between equipment" school of rack management. You see, there are people like me who shove all of the equipment together so that they can maintain a chunk of extra free space in the rack in case something big comes along. Then there are people who say that airflow and heat dissipation are no good when the servers are like that, so they leave one rack unit between their equipment.

I've got blades, so skipping an RU wouldn't do much for my heat dissipation, but my 2nd processor kit is coming with a 1U pair of battery backups for the storage array and I REALLY wish that I hadn't put the array on the bottom of the rack and left the nearest free space about 15 units above it. I'm going to have to do some rearranging, and I'm not sure what I can move yet.

Friday, May 29, 2009

Outstanding new sysadmin resource

I always like posting cool sites and resources that I find, and man, today's is no exception.

I'm willing to bet a bunch of you have already heard of it and are probably participating. It just got out of beta the other day, and it's live for new members. It's called Server Fault, created by the same people who did Stack Overflow.

The general idea is that a sysadmin asks a question to the group. People answer the question in the thread, and the question (and answers) get voted up and down. Think of it like Reddit with a signal to noise ratio of infinity.

I've been active on there, checking it out, and it's frustrating in the beginning (you can't do anything but ask and answer questions, and in your answers you can't even include links). As your questions and answers get voted up, you receive "reputation", and as your reputation improves, you get more abilities on the site. Like an RPG or something, I guess. Check the FAQ for more details.

The absolute best part is that you can learn more and more and more, all the time. I can't tell you how many questions I've seen where I thought "I've always sort of wondered that, too", and I just never took the time to research it. *click*

It's outstanding, and as a technical resource, probably unparalleled in the sysadmin world. Let me know what you think of it (and post a link to your account, if you'd like. Mine is here).

Thursday, May 28, 2009

Software Deployment in Windows, courtesy of Admin Arsenal

One of the blogs that I read frequently is Admin Arsenal. To be honest, they're really the only commercial/corporate blog that I follow, because it's about many aspects of Windows administration, and doesn't just focus on their product.

Shawn Anderson, one of the guys who works there (and a frequent reader here on Standalone Sysadmin), took me up on my offer of hosting guest blog spots, and asked if I would host something written by the Admin Arsenal staff. I agreed, under the condition that the entry wasn't a commercial disguised as a blog entry. Of course their product is mentioned in this entry, but I don't feel that it is over the top or out of place.

The topic we discussed was remote software installation on Windows, something that has always seemed like black magic to me, someone who has no Windows background, and I figured it would be something that many of you would be interested in as well.

In the interest of full disclosure, I should say that I am not getting paid or reimbursed in any way for this blog entry. If you feel that allowing companies (even companies with blogs that I enjoy) to submit guest blogs is a bad idea, say so in the comments. In the end, this is ultimately my blog, but I'm not so stubborn as to not listen to wise counsel.

Let me just reiterate here that anyone who has a topic of interest and wants to do a guest blog is welcome to drop me a line. The chances are great that I'll be very happy to host your work, and that many people would love to read it.

Without further ado, here's the guest entry from Admin Arsenal!

Ren McCormack says that Ecclesiastes assures us that "there is a time for every purpose under heaven. A time to laugh (upgrading to Windows 7), a time to weep (working with Vista), a time to mourn (saying goodbye to XP) and there is a time to dance." If you haven't seen Footloose then you have a homework assignment. Go rent it. Now.

OK, 80's movie nostalgia aside, let's talk about the "dance". Deployment. Almost every system admin knows the pain of having to deploy software to dozens or hundreds or even thousands of systems. Purchasing deployment tools can get very pricey, and learning how to use the new tools can be overwhelming, especially if you are new to the world of software deployment. Here are a few tips to help with your software deployment needs.

Group Policy

Deploying software via Group Policy is relatively easy and has some serious benefits. If you have software that needs to "follow" a user then Group Policy is the way to go. As particular users move from computer to computer you can be certain that the individual software needed is automatically installed when the user logs on. A downside to this approach is that any application you wish to install via Group Policy really needs to be in the form of a Windows Installer package (MSI, MSP, MSU, etc.). You can still deploy non-Windows Installer applications, but you need to create a ZAP file and you lose most of the functionality (such as having the software follow a user). It's also difficult to get a quick installation performed and verified. Generally speaking, you're going to wait a little while for your deployment to complete.

SMS / SCCM

If you are a licensed user of SMS / SCCM then you get the excellent SMS Installer application. SMS Installer is basically version 5 of the old Wise Installer. With SMS Installer you can create custom packages or combine multiple applications into one deployment. You can take a "before" snapshot of your computer, install an application, customize that application and then take an "after" snapshot. The changes that comprise the application are detected, and the necessary files, registry modifications, INI changes, etc. are "packaged" up into a nice EXE. Using this method you ultimately have excellent control over how applications are installed. A key strength of the SMS Installer shows when you need to deploy software that does not offer "Silent" or "Quiet" installations.

A downside to using SMS is the cost and complexity. Site servers. Distribution Point servers. Advertisement creation... it's a whole production.

Admin Arsenal

Admin Arsenal provides a quick and easy way to deploy software. Once you provide the installation media and the appropriate command line arguments, the deployment is ready to begin. The strength is the ease and speed of deployment. No extra servers are needed. No need to repackage existing installations. A downside to Admin Arsenal is that if the application you want to deploy does not have the ability to run in silent or quiet mode (this limitation is occasionally found in freeware or shareware) then you need to take a few extra steps to deploy.

Most applications nowadays allow for silent or quiet installations. If your deployment file ends in .MSI, .MSU or .MSP then you know the silent option is available. Most files that end in .EXE allow for a silent installation.
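To make the file-extension rule concrete, here is a minimal Python sketch that picks a silent command line based on the installer's extension. It is only an illustration, not how Admin Arsenal or any other product does it. The function name and the example path are made up; the switches are the standard msiexec and wusa quiet-mode switches. EXE installers are deliberately left out, because their silent switches vary from vendor to vendor.

```python
from pathlib import PureWindowsPath


def silent_install_command(installer: str) -> list[str]:
    """Return a silent command line for a Windows Installer or update package.

    Hypothetical helper for illustration only. It covers the three
    extensions named in the post and refuses to guess for anything else.
    """
    ext = PureWindowsPath(installer).suffix.lower()
    if ext == ".msi":
        # /i = install, /qn = no user interface, /norestart = suppress reboot
        return ["msiexec", "/i", installer, "/qn", "/norestart"]
    if ext == ".msp":
        # /p = apply a patch package
        return ["msiexec", "/p", installer, "/qn", "/norestart"]
    if ext == ".msu":
        # Windows Update Standalone Installer
        return ["wusa", installer, "/quiet", "/norestart"]
    # EXE installers have no common silent switch, so do not guess one.
    raise ValueError(f"no known silent switch for {ext or 'this file'}")


# Example with a made-up share path:
print(silent_install_command(r"\\fileserver\deploy\example.msi"))
```

The returned list could be handed to whatever actually runs the command on the target machine; that part is outside the scope of this sketch.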

Refer to Adam's excellent blog entry called the 5 Commandments of Software Deployment.

Disclaimer: I currently work for Admin Arsenal, so my objectivity can and should be taken into consideration. There are many solutions commercially available for deploying software. Take a dip in the pool. Find what works for you. If the software has a trial period, put it to the test. There are solutions for just about every need and budget. Feel free to shoot questions to me about your needs or current deployment headaches.

Wednesday, May 27, 2009

Followup to slow SAN speed

I mentioned this morning that I was having slow SAN performance. I said that I'd post an update if I figured out what was wrong, and I did.

EMC AX4-5's with a single processor have a known issue, apparently. Since there's only one storage controller on the unit, it intentionally disables write cache. Oops. I didn't even have to troubleshoot with the engineer. It was pretty much "Do you only have one processor in this?" "yes" "Oh, well, there's your problem right there".

So yea, if you're thinking about an EMC AX4-5, make sure to pony up the extra cash...err..cache..err..whatever.

SAN performance issues with the new install

So, you would think that 12 spindles would be...you know...fast.

Even if you took 12 spindles, made them into a RAID 10 array, they'd still be fast.

That's what I'd think, anyway. It turns out, my new EMC AX4 is having a bit of a problem. None of the machines hooked to it can reach > 30MB/s (which is 240Mb/s in bandwidth-talk). I haven't been able to determine the bottleneck yet, either.

I've discounted the switch. The equipment is nearly identical to that in the existing primary site (using SATA in the backup rather than SAS, but still, 30MB/s?). It isn't the cables. Port speeds read correctly (4Gb from the storage array, 2Gb from the servers).
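To put the numbers side by side, here is a small Python sketch of the arithmetic. It assumes the port speeds above are nominal link rates of 2 and 4 gigabits per second, and it ignores encoding and protocol overhead, so treat the percentages as rough. The function names are just for illustration.

```python
def mbytes_to_mbits(mbytes_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mbytes_per_s * 8


def link_utilization(mbytes_per_s: float, link_gbits_per_s: float) -> float:
    """Fraction of a nominal link rate used by a given throughput.

    Assumes decimal units (1 Gb/s = 1000 Mb/s) and no protocol overhead.
    """
    return mbytes_to_mbits(mbytes_per_s) / (link_gbits_per_s * 1000)


observed = 30  # MB/s, the ceiling seen from every attached machine

print(f"{mbytes_to_mbits(observed):.0f} Mb/s")
print(f"{link_utilization(observed, 2):.0%} of a 2 Gb/s server port")
print(f"{link_utilization(observed, 4):.0%} of a 4 Gb/s array port")
```

With these assumptions, 30MB/s works out to 240Mb/s, which is about 12% of a server port and 6% of the array port. The links themselves are nowhere near saturated.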

The main difference is that the storage unit only has one processor, but I can't bring myself to believe that one processor can't push/pull over 30MB/s. I originally had it arranged as RAID 6, and I thought that maybe the two parity computations were too much for it, but now with RAID 10, I'm seeing the same speed, so I don't think the processor is the bottleneck.

I'm just plain confused. This morning I'm going to be calling EMC. I've got a case open with their tech support, so hopefully I'll be able to get to the bottom of it. If it's something general, I'll make sure to write about it. If it's the lack of a 2nd storage processor, I'll make sure to complain about it. Either way, fun.

Tuesday, May 26, 2009

Monitor Dell Warranty

A post over at Everything Sysadmin pointed me to an excellent Nagios plugin: Monitor Dell Warranty Expiration. Great idea!
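For anyone curious what a check like this boils down to, here is a minimal Python sketch of the threshold logic using the standard Nagios plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL). This is not the plugin linked above, and it does not look anything up at Dell. The expiration date, the function name and the 90/30-day thresholds are all assumptions for illustration.

```python
from datetime import date

# Standard Nagios plugin return codes
OK, WARNING, CRITICAL = 0, 1, 2


def check_warranty(expires: date, today: date,
                   warn_days: int = 90, crit_days: int = 30) -> tuple[int, str]:
    """Map days of warranty remaining onto a Nagios status and message.

    Hypothetical sketch: thresholds are arbitrary defaults, and the
    expiration date must be supplied by the caller.
    """
    days_left = (expires - today).days
    if days_left < 0:
        return CRITICAL, f"CRITICAL - warranty expired {-days_left} days ago"
    if days_left <= crit_days:
        return CRITICAL, f"CRITICAL - warranty expires in {days_left} days"
    if days_left <= warn_days:
        return WARNING, f"WARNING - warranty expires in {days_left} days"
    return OK, f"OK - warranty expires in {days_left} days"


# Example with made-up dates. A real plugin would print the message and
# then call sys.exit(status) so that Nagios can read the return code.
status, message = check_warranty(date(2009, 7, 15), date(2009, 5, 26))
print(status, message)
```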

That was a well needed break

As of last Wednesday, I had gone 31 days with only one day off in the preceding month. I was burned out. Fortunately, this coincided with my friends coming to visit my wife and me from Ohio, so this gave me an excellent opportunity to deman^H^H^H^H^Hrequest time off, and they gave it to me.

For the past 5 days or so, I've been off and running around the NY/NJ area, doing all sorts of touristy things with our friends, and just exploring NYC like I haven't had the chance to before. We spent an entire day at the American Museum of Natural History, and I finally got to go to the Hayden Planetarium to see Cosmic Collisions. It was absolutely worth it, and I'm so glad I picked up a membership on my first visit.

I finally got to go visit Liberty Island and see the statue. It was great, very impressive. After July 4th, they're going to be opening up the crown, but until then, you can only go to the top of the pedestal. It was still a great view.