Tom Limoncelli has a post up today entitled System administration needs more PhDs.

He makes some great observations and brings up a lot of interesting questions. The one that I think the others flow from is "Why are good practices so rarely adopted?"

My opinion, gained through observation, is that sysadmins arise from one of two places. Either they start out in relative isolation, or they come from an environment with multiple systems administrators.

The former develop their own ways of doing things through trial and error and/or research. This leads to endless ways of accomplishing the same or similar tasks. The utter heterogeneity of possible platform combinations lends itself to having each admin reinvent the wheel.

The latter typically have an established infrastructure in place, a well defined set of hardware, and a much more rigid structure of procedures and usually a bona fide methodology for change management.

The standalone sysadmin almost never resembles the well trained sysadmin because best practices all seem to be vendor driven, reliant on a subset of devices and situations, and hidden as well as possible behind support and contract agreements.

Those are hurdles the lone sysadmin faces AFTER he has discovered the "optimal solution", whatever that is. You mention puppet. Should you use cfengine or puppet? Unless you know about puppet, you'll use cfengine, unless you haven't heard of that either, in which case you'll roll your own. In my experience, you'll find $betterSolution right as you're implementing $bestSolutionYouKnowAbout.

I don't know whether there are more sysadmins in a single environment than in a plurality, but there are a _lot_ of sysadmins out there by themselves.

By themselves, sysadmins rely on their own cleverness, but together you get a synergy of ideas. The whole becomes smarter than the sum of the individuals, but most sysadmins never get to experience that. That's one of the reasons I started my blog. To shed light on what other people are doing, how they operate in their organizations, and so on.

Your books are a great resource for sysadmins, but the lone sysadmins of the world need to start communicating among themselves, and with the "institutional" admins out there. The same solution won't always work, but the sharing will go some way toward a meritocracy of ideas.

Friday, October 31, 2008

Thursday, October 30, 2008

Dell's DRAC card sucks

I've worked with my 1855 blade enclosure for a while now, and I feel pretty confident in saying the following:

A) Dell's DRAC is a very useful device which facilitates remote administration

B) at least it would, if it didn't suck so much

The blade enclosure comes with a DRAC module that is inserted into a slot in the back. It's paired with an Avocent KVM module connected to the blade units. You access the KVM through the DRAC web site, which is the real problem.

Every Dell technician I've complained to has said the same thing. "Yes, the DRAC is slow. Very slow, and underpowered". It's not just slow, it vacillates between borderline and completely unusable. On a good day, expect 3 minutes for the page to load. On a bad day, don't expect to load the whole page.

It also seems to occasionally lose track of the KVM. It's happened a few times so far, and there doesn't seem to be any reason for it. Either the DRAC will see the KVM and not be able to administer it (like what is happening right now), or the DRAC won't see the KVM at all.

It is very frustrating, and Dell's techs seem apologetic, but there doesn't appear to be a fix for it. It just sucks.

Next time I'm headed into the colocation, I'm just hooking the console port into the KVM that is connected to the non-blade servers. At least that way I'm not reliant on Dell's sorry excuse for a controller to access the video on my servers.

Wednesday, October 29, 2008

A couple of amusing videos...

This is hilarious...I can't believe I only just found this

The Website Is Down

and..I...I'm not even sure what to say to this....

why you should give your sysadmin a day off

Tuesday, October 28, 2008

Outsourcing your web hosting

I found this link on Reddit today, and I thought that some of you may be shopping around for hosting. 4 things your web host doesn't want you to know

At one point in time, we were looking at a hosted space away from our (then pitiful) primary and backup sites, the idea being that as a last resort, we could redirect users to that site, which would state that we were having issues.

Eventually we got to the point that we felt comfortable with our data sites and canceled the hosting, but if you don't currently have a backup solution, a cheap parked host somewhere might not be a terrible idea.

Here's a page on how to choose a web host. There are many similar pages.

Monday, October 27, 2008

The weather outside is frightful...

but the warm air blowing out of the back of the servers is so delightful...

It snowed on my way into work today. So of course, during my drive, I thought of all the things I was going to have to start doing. Among them was annual maintenance on the work generator.

Typically, maintenance on a largish generator is done on a per-hours-run basis. My manual says to check the oil every 8 hours of running, change the oil every 100 hours, and change the spark plugs every 500 hours. There are other things that should be done annually, however, and for those, we hire a local contractor to come over and take care of things.
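For anyone who would rather not track this on a sticky note, here is a minimal Python sketch of a per-hours-run schedule. The intervals are the ones quoted from my manual; the function name and the idea of recording the hour-meter reading at each service are assumptions made for the example.

```python
# Service intervals in running hours, as quoted from the manual above.
INTERVALS = {
    "check oil": 8,
    "change oil": 100,
    "change spark plugs": 500,
}


def tasks_due(total_hours, last_done):
    """Return the tasks whose interval has elapsed since they were last done.

    total_hours: current hour-meter reading.
    last_done:   hypothetical dict of task name -> hour-meter reading at the
                 last service; a missing task is treated as never done (0).
    """
    due = []
    for task, interval in INTERVALS.items():
        if total_hours - last_done.get(task, 0) >= interval:
            due.append(task)
    return due


if __name__ == "__main__":
    # Oil checked at 110 hours and changed at 10 hours; meter now reads 112.
    print(tasks_due(112, {"check oil": 110, "change oil": 10}))
```

Your own manual's numbers will differ, so treat the table as something to edit rather than trust.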

In central Ohio, we can have nasty winters, but they don't typically start early with the bad storms. Since the end of daylight saving time has moved to the first Sunday of November (this year, Nov 2nd), it makes a great reminder for me to schedule the service call. People in different climes may have to use different milestones, but whatever you use, use something.
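If you want that milestone on a calendar without looking it up each year, the date is easy to compute. This is a small Python sketch; the function name is made up for the example, and it only finds the first Sunday of November, nothing about whether your region actually changes clocks that day.

```python
import datetime


def first_sunday_of_november(year):
    """Return the date of the first Sunday in November of the given year."""
    nov1 = datetime.date(year, 11, 1)
    # date.weekday(): Monday is 0 and Sunday is 6.
    days_until_sunday = (6 - nov1.weekday()) % 7
    return nov1 + datetime.timedelta(days=days_until_sunday)


if __name__ == "__main__":
    print(first_sunday_of_november(2008))
```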

I wrote in June about a generator failure that I don't want to experience again, so learn from my mistake and perform regular maintenance on your equipment.

If you haven't done it yet, now is as good a time as any!

Thursday, October 23, 2008

Multiple privilege levels in IOS

I knew it was possible to set up multiple privilege levels in IOS; I just never had the gumption to research how. It's not a topic that comes up a lot, just something that sort of sat in the back of my mind making me wonder how they did that.

If you're in the same boat as me, wonder no longer. A tutorial from ciscozine has you covered.

Just thought I'd throw this out there since I had always wondered.

Issue remote commands to Windows machines without installing ssh

The other day, I ran into a new (to me) blog that I'm going to start reading. It's called Boiling Linux and Windows (they also do some AIX). If you're a cross platform admin like I am, it's probably worth your while to check it out. I know I'm very light in Windows experience, so it's an educational site for me.

Anyway, last week they covered running commands on a remote Windows machine from another Windows machine. The tool used is psexec, which seems like a combination of rcp and rsh. I say that because I don't actually think the communication is encrypted, according to this conversation. Still, it's an interesting idea.

Wednesday, October 22, 2008

Outage Statistics

I started this blog to share information, and I love it when other people do the same thing. In our industry, it's often hard to come by statistical information regarding infrastructure issues, at least until they happen to us. To save us some time, Michael Janke has posted the last two years' worth of network outage analysis over at his blog.

I won't post spoilers, but it's an interesting read that you should definitely check out.

Tuesday, October 21, 2008

Does 'onboarding' sound like 'waterboarding' to anyone else?

The company I work for is small. Small enough that there's no HR department, and no standard procedure for bringing on new hires, or in the irritating parlance of the day, 'onboarding'. Given that we're about to bring several people in soon, something needs to be done to plan for it, so I'm going to take care of it.

I'm working on a standardized method for getting the person set up from the IT perspective, and I'm going to be getting input from the one HR-ish person who deals with everything like that. I think it'll make things a lot smoother, especially when it comes to ordering licenses and hardware, and setting up accounts and so forth. I don't think I'm to the point that everything can be scripted, but I'm getting a bit closer.

I also want to produce some documentation that educates the new employee about the internal processes and jargon. It's complex to the point that it takes most people a year to pick up the various interconnections between systems. I think I can make a massive cut in the time by providing diagrams and documentation. At the very least, it hasn't been tried before, so I think it would be an improvement.

Anyone want to share what kind of..."onboarding" *shudder* procedures they have at their business?

Is RAID 5 a risk with higher drive capacities?

There's a very interesting discussion going on over at ZDNet about RAID5 and hard drive capacities. The premise of the discussion is that unrecoverable read errors are uncommon, but statistically, we're approaching disk sizes where it will start to matter. Here's a quote from the blog entry:

"SATA drives are commonly specified with an unrecoverable read error rate (URE) of 10^14. Which means that once every 100,000,000,000,000 bits, the disk will very politely tell you that, so sorry, but I really, truly can’t read that sector back to you. One hundred trillion bits is about 12 terabytes. Sound like a lot? Not in 2009."

That would mean a bad block when trying to read. It wouldn't be such a problem, except when it happens while you're rebuilding a RAID array after a drive failure. Failure rates for new drives are about 3% for each of the first three years; after that, the rates rise quickly, according to that author and Google, whom he referenced for the numbers.

So the problem becomes a RAID 5 array with a drive failure. Pull the disk out, add a new one in, and the array has to rebuild. Once every 12TB on average, that rebuild will fail, according to statistics.
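To put rough numbers on that, here is a short Python sketch of the arithmetic. It assumes read errors are independent and evenly spread, which is a simplification of how real drives fail, and the function names are only for illustration. The one input taken from the quote is the rate of one unrecoverable read error per 10^14 bits.

```python
import math

# One unrecoverable read error per 10^14 bits, per the quoted drive spec.
URE_RATE = 1e-14


def bits_to_terabytes(bits):
    """Convert a bit count to decimal terabytes (10^12 bytes)."""
    return bits / 8 / 1e12


def p_ure_during_read(terabytes_read, ure_rate=URE_RATE):
    """Probability of at least one URE while reading this much data.

    Assumes each bit fails independently with probability ure_rate.
    """
    bits = terabytes_read * 1e12 * 8
    # 1 - (1 - rate)^bits, computed with log1p/expm1 to avoid rounding loss.
    return -math.expm1(bits * math.log1p(-ure_rate))


if __name__ == "__main__":
    print(bits_to_terabytes(1e14))   # size of "one hundred trillion bits" in TB
    print(p_ure_during_read(6.0))    # e.g. a rebuild that reads 6 TB
    print(p_ure_during_read(12.5))   # a rebuild that reads the full 10^14 bits
```

Under these assumptions, reading 10^14 bits does not guarantee an error; it gives roughly a 63% chance of hitting at least one, and smaller rebuilds are proportionally less likely to trip over one.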

Commenters have pointed out that the loss of a single block doesn't necessarily mean the array can't rebuild, just that the non-redundancy means loss of that particular bit of data. With backups, you can restore the individual file and have a functioning array. I think it would depend on the controller, but I don't have any data to back that up.

The author argues in favor of more redundant RAID mechanisms. RAID 6 can tolerate the loss of two drives, and other RAID levels can lose even more, depending on the particular failure.

Just the other day, I had a RAID 0 fail, but that was from the controller dying. Have you ever had an array die during rebuild? How traumatic was it, and did you have a backup available to recover?

Also, if you could use a RAID refresher, I mentioned them a while back.

Monday, October 20, 2008

Unexpected source of knowledge

Well, I never thought I'd find something useful there, but sure enough, the Something Awful forum has a section called Serious Hardware / Software Crap, and it should be noted that Something Awful isn't generally work-safe.

Today, thanks to Chris Siebenmann's wiki blog, I was searching for more information on SANs and I chanced on this thread, entitled "Why's my NAS a SAN?". It's actually a good source of information, particularly the first post, if you haven't quite gotten the hang of the how/why of Storage Area Networks.

So if you've got time and you can take the occasional childish behavior, add Something Awful to your list of forums to look through.

Saturday, October 18, 2008

Changes in things around here

Hi!

I've heard from more than one person that they were unable to comment here because of the CAPTCHA method that blogger uses. Apparently this was well known to many people that weren't me. Ironically, this affects people who have enabled certain protections to make browsing more secure, or in the words of one commenter, the very people I want commenting on my entries.

To this end, effective Friday, I've disabled CAPTCHAs on my comments. If you haven't been able to comment here because of them, you will be fine now. There's always the risk of comment spam, but I'd rather deal with that than not have people commenting who want to.

This blog has become far more successful than I could have imagined when I started it in May. According to Google Reader, I have 191 subscribers, just from that one RSS reader. According to Google Analytics, over 36,000 individual people have visited through the 6 months I've been writing. And if you're curious, Adsense has garnered [EDIT] not as much as you'd suspect ;-)

To everyone who keeps coming back to read what I write and to contribute to the discussions, thank you very much.

In the long term, I've been thinking that I may not stay at blogger for a whole lot longer. I haven't decided what software I would end up using, but I'm open to suggestion. Like most sysadmins, I don't have a lot of time to configure the website, which is why I picked blogger in the beginning. Anyway, that is in the future. Effective shortly, www.standalone-sysadmin.com will point to this site, and will be sure to follow wherever the blog goes. Nothing will take you by surprise, though, as I'll write about major changes before I put them into effect.

So that's what's going on. No more captchas, new way to get here (http://www.standalone-sysadmin.com), and I'm shopping around for other blogging software.

If you've got any questions or feedback, please comment below, or send me an email to standalone.sysadmin@gmail.com. Thanks!

Thursday, October 16, 2008

Amanda Backup Suite

I've been contemplating how I'm going to handle backups once my current office goes dark. I'll have moved to NJ at that point and will be working in the NJ office. The tape changer and backup server will have to be located there, along with a decent amount of disk storage. The hard part is always the logistics.

My current backup scheme is not ideal, at least in the way that it accomplishes the backups. The scripts sometimes fail, in many cases have been coded in haste, and are, in a word, kludgy.

By happenstance, Locutus at the IT Toolbox wrote today about Amanda, a command-line based, full-featured backup solution capable of being configured to do wonderful things.

Anyone have experience with this, or have another backup solution they know and love?

Setting up Monitoring

In the relative calm of today, I've been working on getting a graphing solution up and running. I had to decide whether I was going to use the all-in-one solution of Zenoss. They even have a commercial release for support and additional plugins if I needed to go that route.

I might still be inclined to go that route if I didn't already have a working Nagios config. I've put a fair amount of work into learning the ins and outs of the configuration, so I don't want to lose that braintrust. I'm used to how it works, and I like it.

That leaves the vacuum of graphing and trending. I used to use MRTG, but because of the way it works, it bogs down the machine way too much. It generates every graph every time it receives the information, which it retrieves every 5 minutes. That's a lot of graphs I'm never going to look at, and eventually it takes longer than 5 minutes to generate the graphs each time.

To fix this, I'm going with Cacti. It's based on RRDTool, which was written by Tobi Oetiker after he wrote MRTG. Cacti has a very neat option called CactiEZ, a bootable ISO that installs a CentOS 4 based OS and does all the installation and initial configuration for you. I just installed it in a VM and it was very smooth. It even has webmin all set up for you and ready. All you've got to do is configure your devices. It's slick.

I think that I'm going to end up recreating the Nagios configuration from scratch. There's a lot I've learned since I first set it up, and I think I can streamline the configuration. One piece I'm missing is a tool to modify the Nagios config for me. It really is a pain in the butt sometimes, and it's screaming for a web-based tool to take care of it. I just haven't seen one, and now that Nagios v3 is out, I'm sure it'll be forever before the few tools out there update. I think I'll be stuck doing it by hand.
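Short of a real tool, "by hand" can at least mean "by script". Below is a minimal Python sketch that renders Nagios host object definitions from a list of hosts. The host names and addresses are placeholders, and it assumes a `generic-host` template like the one in the sample configuration already exists in your setup; adjust both to match your own files.

```python
# Template for one Nagios host object; doubled braces are literal braces.
HOST_TEMPLATE = """define host {{
    use        {template}
    host_name  {name}
    alias      {alias}
    address    {address}
}}
"""


def render_hosts(hosts, template="generic-host"):
    """Render host definitions from (name, address) pairs.

    The template name is an assumption; use whatever your config defines.
    """
    blocks = []
    for name, address in hosts:
        blocks.append(
            HOST_TEMPLATE.format(
                template=template, name=name, alias=name, address=address
            )
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical inventory, using documentation-range addresses.
    inventory = [("web1", "192.0.2.10"), ("ftp1", "192.0.2.20")]
    print(render_hosts(inventory))
```

Writing the output to a file under your object directory and running the usual config check before reloading would be the natural next step, but that part depends on how your installation is laid out.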

Wednesday, October 15, 2008

When I asked for "normal", this is not what I meant!

Of course, just as I'm settling into my day yesterday, I get a message from one of the operations people, "the primary FTP site isn't working".

I log in and check it, and he's right. The port is open, but no one's home. I ssh'd in fine, the daemon is running, and things seem fine. Of course I checked the logs at that point, and there wasn't an error. In fact, there wasn't a log entry past 12:40am.
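An open port with nobody behind it is exactly the case a plain TCP check misses. As a sketch of a slightly better probe, the Python below connects and waits for the FTP greeting, which normally starts with "220". The function name, default timeout, and the host in the usage line are assumptions for the example, not what my monitoring actually runs.

```python
import socket


def ftp_banner_ok(host, port=21, timeout=5.0):
    """Return True if the server sends an FTP 220 greeting within the timeout.

    A successful connect only proves the port is open; a healthy FTP daemon
    should also greet the client with a line starting with "220".
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.settimeout(timeout)
            banner = sock.recv(1024)
    except OSError:
        # Covers refused connections, resets, and timeouts.
        return False
    return banner.startswith(b"220")


if __name__ == "__main__":
    # "ftp.example.com" is a placeholder host name.
    print(ftp_banner_ok("ftp.example.com", timeout=3.0))
```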

I've had things like this happen before, and when even the logging dies, your disk is probably full. df -h said this:

# df -h
Filesystem  Size  Used  Avail  Use%  Mounted on
/dev/sda4    34G  3.9G    30G   12%  /
/dev/sda2   1.9G   50M   1.9G    3%  /var
/dev/sda3   1.9G   68M   1.9G    4%  /tmp

Everything looks fine there, but something was obviously wrong. Time to check /var/log/messages

Oct 14 00:40:49 bv kernel: sd 0:0:0:0: scsi : Device offlined - not ready after error recovery
Oct 14 00:40:49 bv kernel: sd 0:0:0:0: scsi : Device offlined - not ready after error recovery
Oct 14 00:40:49 bv kernel: sd 0:0:0:0: SCSI error : return code = 0x00000002
Oct 14 00:40:49 bv kernel: sd 0:0:0:0: SCSI error : return code = 0x00000002
Oct 14 00:40:49 bv kernel: sd 0:0:0:0: SCSI error : return code = 0x00010000

Well, hell. That's not a good thing at all. Looking at the errors on the console, I determined that the reiserfs driver (these are older slackware machines) had dispensed with the journal, and the drives were now read only.
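Both symptoms here, kernel disk errors in the log and a filesystem that has quietly gone read only, are things a script can look for. The Python below is a rough sketch: the regular expression is built only from the two messages quoted above, so it will miss other kinds of disk trouble, and the function names are invented for the example. The read-only test uses `os.statvfs`, so it is Unix only.

```python
import os
import re

# Built from the kernel messages quoted above; not an exhaustive list.
DISK_ERROR = re.compile(r"Device offlined|SCSI error")


def disk_errors(lines):
    """Return the log lines that look like disk or controller trouble."""
    return [line.rstrip("\n") for line in lines if DISK_ERROR.search(line)]


def is_read_only(path):
    """True if the filesystem holding path is mounted read-only (Unix only)."""
    return bool(os.statvfs(path).f_flag & os.ST_RDONLY)


if __name__ == "__main__":
    sample = [
        "Oct 14 00:40:49 bv kernel: sd 0:0:0:0: SCSI error : return code = 0x00000002\n",
        "Oct 14 00:40:50 bv sshd: session opened\n",  # made-up unrelated line
    ]
    print(disk_errors(sample))
    print(is_read_only("/"))
```

Note that once the disk goes read only the local log stops growing, as it did here, so a check like this is more useful when it runs against a remote syslog copy or is paired with the read-only test.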

This is the reason you have a backup server.

Remarkably, despite not being able to write to the disk at all, the client website still ran fine, since it's just an apache instance that forwards to an internal tomcat server. That bought us some time.

I verified that the backup was prepared, and coordinated with the operations people to swap the site to the new machine. We got the service swapped over, and I quickly configured a spare machine to act as the backup of the now-running production machine, and then took a look at the broken box.

As it turns out, the controller crapped out. It still won't boot at all, and it says the drives are "incomplete", whatever that means. In addition, I can't even get into the controller bios to reinitialize the array. Great.

So that's how yesterday went, and why there wasn't an entry for it :-) Hope yours went better.

Monday, October 13, 2008

Back to a normal week (hopefully)

I'm back in Ohio this week, and it's nice to have things return to a relative normal. On the personal front, we lowered the price of our house to get it to sell faster, and we've got some apartments in NJ that we're pretty sure we'd like to move into. We've just got to find someone who wants to buy our house now.

At work, my boss is driving up to NJ today, moving stuff from the office, so when I get there, I know it's going to look even more empty than it did.

On the technology side, we finally had to pull the plug on Red Hat Cluster Suite. I don't think I've ever invested more of myself in trying to make something work, and still have it fail. I plan on publishing all of the work and configs that I did in the hopes that it helps someone else, but at this point, it's not stable enough for us to use in production. We kept getting the cluster hung on certain filesystems, and even when it didn't hang, performance was unbelievably slow, even for doing an 'ls'. Anything having to do with metadata of more than a few dozen files froze for a long time, and trying to run 'find' on a clustered directory was enough to kill access to that volume. I suppose for something smaller than ~2 million files, it might work. Next time I think we'll just get a NetApp. When you consider the amount of time I've spent working on this, it'll be cheaper.

To tell you the truth, I'm just shellshocked. I really did spend months on it, and I let my emotion get in the way of my judgement. If I had been looking at it from a detached viewpoint, I would have pulled the plug a long time ago, but I wanted it so badly that I kept hoping it would stabilize.

For an alternate solution, we're running active/passive server backups, where the backup server is alive, but doesn't have access to the volumes. I've had to do all kinds of key magic to make things work right with ssh, and I'm going to have to write scripts to switch smoothly to the backup server. I'm sure there are other ramifications that will occur to me, too.

All in all, I'm really glad that last week is over.

Tuesday, October 7, 2008

Other people's server room heat issues

Jack, over at The Tech Teapot, posted a link the other day to an amusing story from The Daily WTF (an excellent site for real-life tech humor), regarding someone's heat issues in a server closet.

Big Picture: How do you store old email?

The biggest hurdle that we, as systems administrators, have to deal with is that our time is finite, and thus our knowledge is incomplete. The development and growth of the internet has been a boon to people like ourselves, who seek to gain information. The advent of effective search engines goes far in presenting this information usefully and keeps it at our fingertips.

I've found that there are some things that search engines don't do so well on. You can search Google for how, technically, to archive email, but you can't search for what the best policy is. You can't grep the experience of other administrators unless they've written about what they've done, and you can't download memories unless they've been recorded and put online.

That's a large part of why I started this blog.

None of us have as much experience as all of us, and by working together, we amass a pool of information and experiences that can help others learn what we have, and hopefully by standing on shoulders, heads, and feet, eclipse us and our knowledge. And then we do the same to them.

It's a type of bootstrapping where we architect our own usurpers, then try to follow their example and surpass their achievements. Collective learning and pushing and growing. It's great to watch and experience, and if you're reading this blog, you're a part of it.

You know that I ask for help all the time. Password retention, project management software, even best practices for security policies. I'm not afraid to admit that I don't know something, or that I don't know how to do something. There's no shame in ignorance, there's just an opportunity to learn.

In that same vein, I thank you for all the help you have given me previously, and ask again for advice and experience.

I have users who currently store, and semi-regularly read, email up to 8-9 years old. All told, one of my users weighs in at 11GB of mail.

If you're a regular reader, you know I asked about hosted email providers the other day. We're going to be limited to having 4GB of mail per user. The act of storing every email which touches the server, as suggested by this email compliance document, is completely infeasible. We have several users who all receive the same tens-of-megabytes files every day of the week from several clients. I would need a $100,000 storage system with data deduplication just to store a few years' worth of mail.

Aside from being completely out of the financial ballpark, it would violate the principle of keeping your least volatile data off your most expensive storage.
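To put a rough number on what deduplication would buy when several users receive the same large attachment, here is a minimal sketch in Python. It assumes whole-payload deduplication keyed on a SHA-256 hash; real storage systems typically deduplicate at the block level, and the function name `deduplicated_size` is purely illustrative.

```python
import hashlib


def deduplicated_size(payloads):
    """Return (raw_bytes, unique_bytes) for an iterable of bytes payloads.

    Identical payloads are counted once in unique_bytes, which is roughly
    what whole-file deduplication would store.
    """
    raw = 0
    unique = {}
    for payload in payloads:
        raw += len(payload)
        digest = hashlib.sha256(payload).hexdigest()
        unique.setdefault(digest, len(payload))
    return raw, sum(unique.values())


if __name__ == "__main__":
    # Hypothetical mailbox contents: one attachment sent to five users,
    # plus one small message that only a single user received.
    attachment = b"x" * 1000
    mail = [attachment] * 5 + [b"y" * 10]
    raw, stored = deduplicated_size(mail)
    print(f"raw={raw} bytes, stored after dedup={stored} bytes")
```

Run against a real mail spool, the ratio between the two numbers gives a first guess at whether a deduplicating system would be worth pricing out at all.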

This article has what sounds like good advice, but much of it is provided by people with vested interests in selling solutions. I want to hear from other admins who deal with this. Even if you don't deal with it, what ideas do you have as to how it should be done?

Monday, October 6, 2008

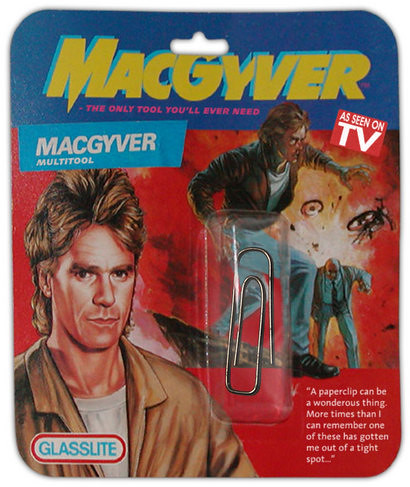

Foiling the dreaded zip-tie

So I was in a jam this weekend. I needed to remove some wire in the new rack. When it was installed, the colo people used plastic zip ties instead of velcro ties, I'm sure to save money.

I didn't have scissors or wire cutters, so I had to figure out a way to beat the zip tie. I thought about it, looked around for possible tools, couldn't find anything, so I sat and brainstormed about how I could beat it. I came up with the answer, and now I can open pretty much every zip tie (that I've tried so far) without scissors.

The key is to know how zip ties work. Essentially, one side of the plastic strip is smooth, and the other has teeth molded into it. In the square ring that you pull the strip through, there's a small piece of plastic down at an angle so that, when the plastic teeth are pulled one way, they slide right through, and when they're pulled against the grain, it catches and stops. It's much easier to show you a drawing of how it works:

Pull the plastic strip through, and it tightens the hold, because the tooth slides across the ridges.

Pull the other way, and the teeth catch, stopping the movement. To release the catch, all you need is a paper clip with one of the arms bent straight:

Push the paperclip in from the side you want to be able to pull towards, and fish it around until you find the tooth that's keeping it in place. Push forward on the tooth to lower it, while wedging the paperclip against the plastic strip and pulling to try to loosen the tie. Once you get it, it's pretty easy to keep the paperclip in place to open the whole loop, and release the end.

You might have already known this, and if so, I apologize for wasting so much time on it, but I was amazed that it worked, and it made me really, really happy to be able to get my cables loose without wire cutters.

Friday, October 3, 2008

Answer to Slow Speeds from last week

Thanks, everyone, for your input last week on my slow transfer speeds.

The winner was Jack, who suggested that compression might be causing my problems. Indeed, going from 20Mb/s to around 400Mb/s was as easy as removing the -z flag from rsync.

Jack, thank you very much, and I'm really, really sorry that I didn't take your advice sooner!
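Why would compression slow a transfer down? A simple way to think about it, sketched below in Python, is that a compressed stream can go no faster than the CPU can compress it, so on a fast link the compressor becomes the bottleneck. This is a back-of-the-envelope model, not a description of rsync's internals: the function `effective_rate`, the 2:1 compression ratio, and the idea that the CPU tops out at 20Mb/s are all assumptions chosen to line up with the numbers above.

```python
def effective_rate(link_mbps, compress_mbps=None, ratio=1.0):
    """Estimate throughput of uncompressed data in Mb/s.

    link_mbps     -- raw link speed
    compress_mbps -- how fast the CPU can compress input (None = no compression)
    ratio         -- compression ratio, e.g. 2.0 means the data shrinks to half
    """
    if compress_mbps is None:
        return link_mbps
    # The stream is limited by the slower of the compressor and the
    # link carrying the (smaller) compressed data.
    return min(compress_mbps, link_mbps * ratio)


if __name__ == "__main__":
    # Fast link: compression caps the transfer at the compressor's speed.
    print(effective_rate(400, compress_mbps=20, ratio=2.0))
    print(effective_rate(400))
    # Slow link (hypothetical 5Mb/s): here compression helps.
    print(effective_rate(5, compress_mbps=20, ratio=2.0))
    print(effective_rate(5))
```

Under this model, -z only pays off when the link is slower than the compressor, which is the opposite of the situation on a gigabit LAN.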

Thursday, October 2, 2008

Working in NJ

I'm up in the New Jersey corporate headquarters today, and will be all of next week, too.

I'm up here for general maintenance, and to fine-tune the stack of equipment in the soon-to-be production rack.

I'm upgrading the switches from 24-port GbE Netgear to 48-port GbE 3Com, which have a lot of added features that I'm hoping to take advantage of.

Since I'm usually working pretty constantly up here, my blog posts might become even more sparse than they were before, and maybe some guest bloggers will drop in next week.

As usual, I'll be available at standalone.sysadmin@gmail.com, or just comment on one of the posts.

By the way, I'm typing this on an Acer AL2416W 24" LCD. I have *got* to get one of these for myself.

Wednesday, October 1, 2008

They always said things come in threes

A while back we had the Kaminsky DNS bug.

Then it was (re)brought to light that BGP had a big gaping security hole.

Now, the cherry on the whipped cream: a DoS attack that can reportedly take down any TCP stack it's been tried on.

Terrific.

Link to 15 tips on how to setup your rack

Sorry I've been too busy to post this week. I did find this link that I thought you'd enjoy, though.

15 tips to properly setup your own hosting racks.

You don't necessarily have to be setting up hosting racks to use these tips. There isn't a lot of depth to the tips, necessarily, but they are good to keep in mind.

I also did a HOWTO on Racks and Rackmounting a while back that you might not have seen.
